The BI Platform That They Already Own

So, now that we've seen some of the basic capabilities of Pentaho Analysis Services (aka Mondrian), let's check the other side of the ring where SQL Server Analysis Services (SSAS) sits. The entire SQL Server BI stack is a very interesting case. Starting with SQL Server 2005, Microsoft began packaging their entire BI suite with a Standard Edition license, and I mean the whole shebang. SSIS, SSAS, SSRS, SSMS, and BIDS...the whole gang to satisfy all data analytic needs. Apparently, this was not emphasized enough in the literature for Microsoft SQL Server, because more often than not, this can be news to IT folks in enterprises. Usually, it's good news, as the company may have already made a sizeable investment in SQL Server, and the icing on the cake is a world-class BI platform.

So, now that we've seen some of the basic capabilities of Pentaho Analysis Services (aka Mondrian), let's check the other side of the ring where SQL Server Analysis Services (SSAS) sits. The entire SQL Server BI stack is a very interesting case. Starting with SQL Server 2005, Microsoft began packaging their entire BI suite with a Standard Edition license, and I mean the whole shebang. SSIS, SSAS, SSRS, SSMS, and BIDS...the whole gang to satisfy all data analytic needs. Apparently, this was not emphasized enough in the literature for Microsoft SQL Server, because more often than not, this can be news to IT folks in enterprises. Usually, it's good news, as the company may have already made a sizeable investment in SQL Server, and the icing on the cake is a world-class BI platform.

Now, for those companies who haven't already made an investment in SQL Server, the barrier to entry for SQL Server BI may be the price of a license. After all, the bulk of the license fee pays for the RDBMS, one would argue. However, this does not diminish the value of the SQL Server BI stack. This platform has come a long way since Analysis Services was first revealed in SQL Server 2000, and we will take a deep dive into the capabilities of the latest features within SQL Server 2008 R2 Business Intelligence.

SQL Server Analysis Services (SSAS)

Setup

- Windows 7 Home Premium (64-bit)

- 8 GB RAM

- Microsoft SQL Server 2008 R2 (SSAS, SSIS, SSRS, SSMS, BIDS)

- Source Control Link: Click Here

- SQL Server Management Studio (aka SSMS, desktop application)

- Business Intelligence Development Studio (aka BIDS, desktop IDE)

This setup of SQL Server 2008 BI collocates both the relational database system with SSAS, as with our Mondrian deep dive. Now, you may be saying to yourself, the resources available for SQL Server 2008 BI is considerably more than what we offered on a meager VM for PAS. However, this shoot-out isn't designed to empirically prove which platform is faster -- the intent here is to see which product ,in terms of end-to-end development and consumption of BI, has the edge over the other. So, we're talking ease of development, usability, and product cohesion versus "how many milliseconds did it take to bring back those 200,000 tuples".

With that in mind, setup of a collocated SQL Server BI platform is also a cinch. Just simply follow the steps on the SQL Server install (we chose default options, apart from our instance names, which we specified as SQLDEV01), let the install run, grab a cup of tea, then come back to see SQL Server 2008 R2 running on your machine. (Of course, when deploying to a production environment, the install gets a little more involved, with ActiveDirectory identities, RDBMS collation settings, default file locations, etc.)

|

| SQL Server Business Intelligence on one server |

As far as a readily deployed UI for ad-hoc analytics (such as Pentaho User Console), SQL Server does not have something out of the box. It would be neat if Microsoft, in a future release, would have a Pentaho User Console equivalent -- perhaps a Sharepoint site with PowerView and PowerPivot.

Like PAS, SSAS runs as a service, persisting and caching calculations in memory to enable fast analysis. Each SSAS database (equivalent to a PAS schema) has a plethora of options (as we will see later) to enable efficient partitioning, scaling, and caching. In terms of connectivity to SSAS databases, you've got the usual suspects: default TCP ports on which SSAS listens (2383 by default) and XMLA or SOAP calls via IIS.

As with the PAS review, I have produced all the artifacts that we cover here on my source control system. If you have a Subversion client, point to https://edg.sourcerepo.com/edg/SQLServerAdventureWorks

Like PAS, SSAS runs as a service, persisting and caching calculations in memory to enable fast analysis. Each SSAS database (equivalent to a PAS schema) has a plethora of options (as we will see later) to enable efficient partitioning, scaling, and caching. In terms of connectivity to SSAS databases, you've got the usual suspects: default TCP ports on which SSAS listens (2383 by default) and XMLA or SOAP calls via IIS.

As with the PAS review, I have produced all the artifacts that we cover here on my source control system. If you have a Subversion client, point to https://edg.sourcerepo.com/edg/SQLServerAdventureWorks

Now that we've got the technical rundown covered, let's dive right into some development!

Connection and Schema Creation

In PAS, we mentioned that a power user can develop their own cubes directly on Pentaho User Console or a developer can deploy a proper schema for consumption by many stakeholders. The same could be said about the SQL Server BI stack. However, it's not quite as cohesive as Pentaho. For a power-user who wants to create cubes for quick and dirty analysis, Microsoft offers PowerPivot for Excel. While technically not a part of the SQL Server BI stack (it's obviously an Excel product), it offers the same functionality as the Pentaho User Console in allowing users to define quick one-off schemas for data analysis.

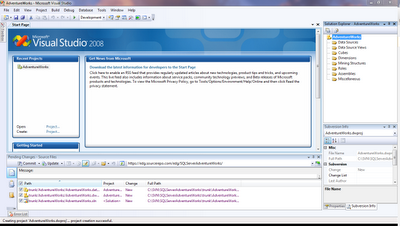

We're more concerned about wrapping BI in a development lifecycle in this comparo, so with that in mind, we go straight to the heart of SQL Server BI development, Business Intelligence Development Studio (BIDS). BIDS is simply the Visual Studio 2008 IDE with templates designed specifically for SQL Server BI. You've got projects for SSAS, SSIS, and SSRS all in a neat, tidy package.

|

| Ready-made, world-class IDE for Business Intelligence development |

For those who develop Microsoft products, this is tremendous, as you are working within a familiar IDE. For developers from other platforms, I can assure you that you will start to love Visual Studio when working with SQL Server BI (take it from a guy who also loves Eclipse).

The first thing we must do is to create a new SSAS project. So, upon launching BIDS, we select Analysis Services Project and for a Name, let's call it the obvious (AdventureWorks). This creates a proper project for you to begin SSAS development. On the Solution Explorer, you will see subfolders for various artifacts that SSAS expects.

The subfolders are actually arranged vertically by the logical steps you would take to create SSAS cubes. The first thing we must establish is a connection to our RDBMS data mart. This will be accomplished by right clicking on Data Sources, then clicking New Data Source. The Data Source Wizard comes up and guides you through the connection setup process.

On the "Select how you define the connection" step, we would click on the "New..." button which will launch a Connection Manager window which should be familiar to Microsoft application developers. The server name for this case is localhost\SQLDEV01 using Windows Authentication. We then select the AdventureWorksDW2008 database which already resides on the instance. Click Test Connection to ensure connectivity, then click OK.

Hit Next and then we come to the step which asks for impersonation information for this SSAS database. This is very important, as this is the user context on which the SSAS database will attempt connectivity to the relational data mart. For the purposes of this exercise (and for simplicity's sake), since the service is running as Local System, I simply select "Use the service account" then click Next. In a Production setting, you would most likely use some kind of domain service account for the user context.

The last step is to define the name for this Data Source, so let's call it Adventure Works Data Warehouse, then click Finish. Voila, the foundation for our cube and dimension development has been laid down.

Cube Creation

So, now, let's define our analytic structure. In SSAS, this is first accomplished by creating Data Source View against a Data Source. This Data Source View essentially is the star schema by which cubes would adhere. You can define your entire star schema on one Data Source View or segment it at your discretion. The Data Source View also acts as an abstraction/alias layer, where you may rename the names of the relational tables and/or table columns to terms that are relevant to your business stakeholders. This is the concept of a "FriendlyName" within SSAS.

For simplicity's sake, let's define the entire star schema of Adventure Works in one Data Source View. We right click on Data Source Views then click on New Data Source View to begin. Another friendly wizard pops up to guide us on our way.

It first asks for the Data Source for the view -- we will select the Adventure Works Data Warehouse source that we had created in the prior step.

In the second step, the wizard asks for the tables/views to be included in the view. To answer a possible question, yes, it is entirely possible to create an SSAS cube against an existing relational data source that has Views built on top of it to simulate a star schema. However, this is generally not recommended, as a core tenet of a DW/BI solution is to minimize the hits to your source relational database. For more reading on the subject, I would recommend the usual suspects: The Data Warehousing Toolkit and the Microsoft Data Warehousing Toolkit.

For the purposes of this exercise, we will select the Dimension and Fact tables as they appear on the relational source and bring them over. Click Next.

Finally, we assign a name to the Data Source View. To be descriptive, let's call this Adventure Works Full Dimensional Model then click Finish.

The neat part about a Data Source View in SSAS is that it automatically determines the relationships between your Dimension and Fact tables based on the relationships established on the relational database. So, as an example, on the AdventureWorksDW2008 database, there is a foreign key constraint on the FactInternetSales table on the CurrencyKey column that points its parent table to the DimCurrency table's CurrencyKey column. You see the same relationship established on the DSV. In short, SSAS tries not to have you repeat your work that you did on the data mart.

You can go crazy with the FriendlyNames here on the DSV. In fact, most businesses would generally request friendlier names for the Dimension and Fact Tables (for example, "Reseller Sales" instead of "FactResellerSales", "Employee" instead of "DimEmployee", etc.), but for the purposes of this exercise, we're going to leave it as-is. Now, let's create some cubes against this Data Source View. This is accomplished by right clicking on Cubes, then clicking on New Cube. The Cube Wizard pops up and on the second step for the Select Creation Method, we select the Use existing tables option then click Next.

On the next step, the Select Measure Group Tables, we will select a Fact table...for this example, we'll start with FactResellerSales as we did with PAS. Click Next.

In the next step, the wizard breaks down the fact table by the measure groups detected (basically anything numeric). You can de-select any fields that may actually be degenerate dimension fields -- in this fact table's case, Revision Number would be such a field, so we deselect that field for the measure groups. Click Next.

In the following step, the wizard tries to detect the dimensions that must be created based on the star schema of the sourcs DSV. We will leave this as-is, as it detects all the dimensions and subdimensions properly, thanks to a well-constructed data mart. Click Next.

Finally, we are to give the cube a name. Let's call this one Reseller Sales. Click Finish.

SSAS creates the cube along with skeleton dimensions (just the dimension keys as attributes). We'll get to the dimensional attributes later. For now, let's ensure our cube is setup properly. First, let's make sure that the appropriate measure groups round off to the nearest hundredth decimal place. This is accomplished by expanding the Fact Reseller Sales node on the Measures pane (upper left hand corner in the Cube Structure window), selecting a measure, then setting its FormatString property to #,##0.00;-#,##0.00. We'll do this for all the measure groups in Fact Reseller Sales except for Fact Reseller Sales Count.

Just being nitpicky here, but I'd like a future release to have the ability to select many measure groups and being able to set common properties to the same value. It's certainly not a dealbreaker, but it can get fairly annoying when you have a fact table of many measures.

For now, let's Build and Deploy the cube and dimensions as they stand so that BIDS' metadata gets refreshed and we can work with our model in a more dynamic fashion. First, let's make sure we are deploying to the local SSAS instance by right clicking on the AdventureWorks solution then clicking Properties. In the Deployment subwindow, the Target Server should be localhost\SQLDEV01 and the Database should be AdventureWorks.

Click on Build --> Build AdventureWorks. We shouldn't receive any errors. Finally, click on Build --> Deploy AdventureWorks. After a quick deployment, we're all set for further development.

Click on Browser to refresh the metadata within the IDE for dynamic development.

Creating Calculations

As with PAS, creating calculations in SSAS is a straightforward process. Click on the Calculations subwindow. Then right click in the Script Organizer pane and select "New Calculated Member". The Name of the Calculated Member will be [Net Profit Or Loss].

As we defined in the PAS exercise, this calculated member simply subtracts Total Product Cost from Extended Amount. Since we deployed and refreshed the metadata of our cube, BIDS offers a nice drag and drop feature from the measure groups for this calculated member. We simply expand the Measures node and first drag over Extended Amount to the Expression text box (which results in [Measures].[Extended Amount] to be written). Type in a dash for the minus sign then drag over Total Product Cost.

In the Format String, use the same "#,##0.00;-#,##0.00" formatting (do not forget to include the double quotes in this case) we used on the measure groups. For Non-empty behavior (in other words, what measure group's non-empty behavior it would mimic), select Extended Amount and Total Product Cost. Click File --> Save All, and the calculated member is set!

Dimension Creation

Now that we have the basic setup of the cube complete, we can move onto hashing out the skeleton dimensions that were created for us. To say the creation and editing of dimensions in BIDS is easier compared to the Pentaho Schema Workbench would be a severe understatement.

For starters, the Dimensions are already created for us in SSAS upon creation of the cube. As mentioned previously, these skeleton dimensions simply have their primary keys as the attributes, but BIDS allows the ability to intuitively add attributes from the source to the dimension.

We'll use the Dim Sales Territory dimension as an example. Let's double click on Dim Sales Territory under Dimensions. There are four subwindows on this view -- Dimension Structure, Attribute Relationships, Translations, and Browser. Dimension Structure is the default view and is the place where we will define what attributes are to be included in the Dim Sales Territory dimension.

In this Dimension Structure view are three self-explanatory areas: Attributes, Hierarchies, and Data Source View. To add an attribute to the dimension from the source, one must simply select what attributes to be added from the Data Source View and drag/drop these fields to the Attributes area. For Dim Sales Territory, we will move the rest of the fields from the Data Source View over to the Attributes area. Repeat this process for all the dimensions that have been created.

Creating Hierarchies

Once attributes have been defined, to define hierarchies, it will be the same drag/drop principle, except this time, you will be dragging individual Attributes to the Hierarchies area, in order from parent to child. For this particular hierarchy, as the Sales Territory Group is the parent of Sales Territory Country and Sales Territory Country is the parent of Sales Territory Region. We define this hierarchy as such.

You can rename the Hierarchy once it has been established by right clicking on it and going to Properties. We'll call this hierarchy Sales Territories.

One last step in defining this hierarchy is to define the Attribute Relationship for the hierarchy. This enables SSAS to effectively and efficiently calculate this hierarchy during processing, and thus, it will make aggregations against this hierarchy be considerably faster.

To establish the desired Attribute Relationship, we click on the Attribute Relationships pane. At first, we see that there is only attribute relationships defined between the Sales Territory Key and the dimensional attributes we dragged over earlier.

Attribute Relationships are read to be many to one from left to right. So, for the example above, the Attribute Relationship should be read as "Many Sales Territory Keys are associated to one Sales Territory Group", etc. We want to further hash this Attribute Relationship out to include the hierarchy we created.

Conceptually speaking, we want to design the relationship such that you can read it as: "Many Sales Territory Regions are associated to one Sales Territory Country, and many Sales Territory Countries are associated to one Sales Territory Group".

To implement this, we first start at the lowest level, Sales Territory Region. Right click on this node then click on New Attribute Relationship. On the Related Attribute dropdown, select Sales Territory Country. Leave the Relationship type as-is (Flexible). Click OK. Repeat the same step with the Sales Territory Country node, except the Related Attribute should now be Sales Territory Group.

Save the dimension, build the solution, and deploy it. Now, if we go back to the Reseller Sales cube and go to Browser, you will see the Sales Territories Hierarchy defined in the Dim Sales Territory dimension.

Applying Security

SSAS has an incredibly robust security system. The database is secured by a Roles-based system, where one can define what users can access what cubes. We can even get to the granular level and define what dimensions or what tuples specific users can see (though, Microsoft generally recommends making your security definitions broad).

Since this topic covers all manner of sins, I will refer you to the TechNet lesson which covers all the basics. Suffice to say, this security model should fulfill the vast majority of restricted access needs of an organization.

Consumption of Data

So, now we have a nice, fully hashed out Dimensional Model with a Reseller Sales cube properly defined. We do one last build and deploy, and our SSAS database is now ready for some slicing and dicing!

The primary user interface for SSAS really is Microsoft Excel (2010 version in this example). It is the platform of choice for most data analysts in enterprises, and quite frankly, it is one of the best ad-hoc data analytic tools, period. This is the one area where SQL Server 2008 BI kind of falls short comparatively to Pentaho. As previously mentioned, Pentaho's main paradigm is everything through the Pentaho User Console first. SQL Server 2008 BI requires a little work to get a common interface up and running, specifically with tools like Sharepoint Server and PowerPivot for Sharepoint.

With regards to Excel 2010, establishing a connection to our SSAS cube is straightforward. First, go to the Data ribbon and select "From Other Sources". In the submenu, select Analysis Services. The server name for this example is localhost\SQLDEV01 and we will use Windows Authentication.

Upon hitting Next, we see our deployed AdventureWorks database and the Reseller Sales cube available. Select the Reseller Sales cube then hit Finish.

Excel asks what kind of report we want, and we'll use a plain ol' PivotTable Report for this example. We'll drop the table on $A$1 and hit OK. What appears next is very familiar to Excel users, the Pivot Table Field List. It is broken down by the measures table first followed by the associated dimensions.

From here, slicing and dicing is as simple as dragging and dropping measures and dimensional attributes to the appropriate axes or to the values section. First, let's see Net Profit or Loss by Department Name (from the Dim Employee dimension) and broken down by Fiscal Years across the columns. We'll select Net Profit or Loss from the measures and then drag Department Name to the Row Labels and Fiscal Year to the Column Labels.

Easy peasy. The Excel 2010 PivotTable is the most powerful and primary way most "power data analysts" will access the cubes. It allows the ad-hoc functionality to answer most data analytic questions in whatever format the data analyst desires. Because it is native to Excel 2010, all functionality of Excel 2010 is also at the disposal of the data analyst, which some may argue is the best AND worst part about opening up Excel 2010 as the primary front-end for SSAS.

Since this overview only covers SSAS, we will not go into detail about SSRS, but one should understand that SSRS is the primary front-end for parameterized static reports against the SSAS cubes. That is, the report format itself will not change, but users would need the functionality to see different cuts of the data on the report format. One could argue the same functionality could be replicated in Excel -- however, SSRS offers niceties such as application level security and scheduled distribution of reports. In most SQL Server BI implementation I'd been involved with, a combination of Excel and SSRS usually does the trick for a majority of users.

Consumption of Data Summary

While PAS comes tightly coupled with Pentaho User Console, SSAS and SQL Server BI does not have a "one stop shop" for users to immediately access BI artifacts. The primary bare-bones method for accessing cube data is via Excel 2010, and SSRS reports could be built on top of the SSAS cubes to allow users the ability to have parameterized static reports.

This setup isn't necessarily a bad thing, as not all BI implementations are created equal. However, a common front-end like Pentaho User Console would be most helpful to make BI implementations on SQL Server a little more agile with regards to rapid prototyping. On the flip side of the coin, most of the audience for a BI tool would be ecstatic with the capabilities granted on their "home turf" platform (Excel), so such a user interface would be more of a nice-to-have.

In terms of consuming the data itself, it is incredibly intuitive within Excel 2010. Instead obtaining knowledge of, say, ANSI SQL querying, data analysts need only understand how the dimensional model is structured and how a PivotTable Report works, and many analytic questions can be answered. In short, if you're walking into a Microsoft shop that needs BI, there are very few reasons why you SHOULDN'T use SQL Server BI.

SQL Server Analysis Services: The Good, The Bad, and The Weird

The Good

The Bad

SQL Server Analysis Services: The Summary

I honestly think, apart from minor quabbles, that Microsoft has hit their BI offering out of the park. While platforms such as TM-1 or EssBase may have the leg up in terms of flexibility under the hood of the analytic engine, SSAS has succeeded in wrapping the complicated concept of BI development and consumption into an easy-to-use package. While arguments can be made for the merits or shortcomings of requiring a full SQL Server license for the BI stack, I actually think that the tight coupling is needed.

A BI solution needs to be coherent not only on the end-product but also for development. In my opinion, tightly coupling the components with the SQL Server database engine ensures the best functionality of SSAS (and the other BI components) out of the gate. Gone are the days when developers need to troubleshoot why the analytic engine doesn't play nicely with the database engine, or why the reporting front-end has some quirks obtaining data from the analytic engine. SQL Server BI's strongest trait is its coherence.

If you have a Microsoft shop with an investment already made in SQL Server, choosing SSAS as the primary analytic engine (as well as the rest of the SQL Server BI stack for other functionality) should be a no-brainer. If your organization has not made an investment in SQL Server, but is otherwise a heavy Microsoft shop, SSAS is still definitely worth a look. While the cost is a factor, comparable offerings from vendors such as Oracle or SAP would probably run you the same amount and would not have the luxury of tight integration with other Microsoft offerings. Make no mistake, SSAS is a world-class analytic platform that I would not hesitate recommending.

The first thing we must do is to create a new SSAS project. So, upon launching BIDS, we select Analysis Services Project and for a Name, let's call it the obvious (AdventureWorks). This creates a proper project for you to begin SSAS development. On the Solution Explorer, you will see subfolders for various artifacts that SSAS expects.

|

| A blank SSAS 2008 Project |

On the "Select how you define the connection" step, we would click on the "New..." button which will launch a Connection Manager window which should be familiar to Microsoft application developers. The server name for this case is localhost\SQLDEV01 using Windows Authentication. We then select the AdventureWorksDW2008 database which already resides on the instance. Click Test Connection to ensure connectivity, then click OK.

Hit Next and then we come to the step which asks for impersonation information for this SSAS database. This is very important, as this is the user context on which the SSAS database will attempt connectivity to the relational data mart. For the purposes of this exercise (and for simplicity's sake), since the service is running as Local System, I simply select "Use the service account" then click Next. In a Production setting, you would most likely use some kind of domain service account for the user context.

The last step is to define the name for this Data Source, so let's call it Adventure Works Data Warehouse, then click Finish. Voila, the foundation for our cube and dimension development has been laid down.

|

| Ready to rock! |

So, now, let's define our analytic structure. In SSAS, this is first accomplished by creating Data Source View against a Data Source. This Data Source View essentially is the star schema by which cubes would adhere. You can define your entire star schema on one Data Source View or segment it at your discretion. The Data Source View also acts as an abstraction/alias layer, where you may rename the names of the relational tables and/or table columns to terms that are relevant to your business stakeholders. This is the concept of a "FriendlyName" within SSAS.

For simplicity's sake, let's define the entire star schema of Adventure Works in one Data Source View. We right click on Data Source Views then click on New Data Source View to begin. Another friendly wizard pops up to guide us on our way.

It first asks for the Data Source for the view -- we will select the Adventure Works Data Warehouse source that we had created in the prior step.

In the second step, the wizard asks for the tables/views to be included in the view. To answer a possible question, yes, it is entirely possible to create an SSAS cube against an existing relational data source that has Views built on top of it to simulate a star schema. However, this is generally not recommended, as a core tenet of a DW/BI solution is to minimize the hits to your source relational database. For more reading on the subject, I would recommend the usual suspects: The Data Warehousing Toolkit and the Microsoft Data Warehousing Toolkit.

For the purposes of this exercise, we will select the Dimension and Fact tables as they appear on the relational source and bring them over. Click Next.

Finally, we assign a name to the Data Source View. To be descriptive, let's call this Adventure Works Full Dimensional Model then click Finish.

|

| Star schema relationships defined in the view? Why thank you! |

You can go crazy with the FriendlyNames here on the DSV. In fact, most businesses would generally request friendlier names for the Dimension and Fact Tables (for example, "Reseller Sales" instead of "FactResellerSales", "Employee" instead of "DimEmployee", etc.), but for the purposes of this exercise, we're going to leave it as-is. Now, let's create some cubes against this Data Source View. This is accomplished by right clicking on Cubes, then clicking on New Cube. The Cube Wizard pops up and on the second step for the Select Creation Method, we select the Use existing tables option then click Next.

On the next step, the Select Measure Group Tables, we will select a Fact table...for this example, we'll start with FactResellerSales as we did with PAS. Click Next.

In the next step, the wizard breaks down the fact table by the measure groups detected (basically anything numeric). You can de-select any fields that may actually be degenerate dimension fields -- in this fact table's case, Revision Number would be such a field, so we deselect that field for the measure groups. Click Next.

In the following step, the wizard tries to detect the dimensions that must be created based on the star schema of the sourcs DSV. We will leave this as-is, as it detects all the dimensions and subdimensions properly, thanks to a well-constructed data mart. Click Next.

Finally, we are to give the cube a name. Let's call this one Reseller Sales. Click Finish.

SSAS creates the cube along with skeleton dimensions (just the dimension keys as attributes). We'll get to the dimensional attributes later. For now, let's ensure our cube is setup properly. First, let's make sure that the appropriate measure groups round off to the nearest hundredth decimal place. This is accomplished by expanding the Fact Reseller Sales node on the Measures pane (upper left hand corner in the Cube Structure window), selecting a measure, then setting its FormatString property to #,##0.00;-#,##0.00. We'll do this for all the measure groups in Fact Reseller Sales except for Fact Reseller Sales Count.

Just being nitpicky here, but I'd like a future release to have the ability to select many measure groups and being able to set common properties to the same value. It's certainly not a dealbreaker, but it can get fairly annoying when you have a fact table of many measures.

For now, let's Build and Deploy the cube and dimensions as they stand so that BIDS' metadata gets refreshed and we can work with our model in a more dynamic fashion. First, let's make sure we are deploying to the local SSAS instance by right clicking on the AdventureWorks solution then clicking Properties. In the Deployment subwindow, the Target Server should be localhost\SQLDEV01 and the Database should be AdventureWorks.

Click on Build --> Build AdventureWorks. We shouldn't receive any errors. Finally, click on Build --> Deploy AdventureWorks. After a quick deployment, we're all set for further development.

Click on Browser to refresh the metadata within the IDE for dynamic development.

Creating Calculations

As with PAS, creating calculations in SSAS is a straightforward process. Click on the Calculations subwindow. Then right click in the Script Organizer pane and select "New Calculated Member". The Name of the Calculated Member will be [Net Profit Or Loss].

As we defined in the PAS exercise, this calculated member simply subtracts Total Product Cost from Extended Amount. Since we deployed and refreshed the metadata of our cube, BIDS offers a nice drag and drop feature from the measure groups for this calculated member. We simply expand the Measures node and first drag over Extended Amount to the Expression text box (which results in [Measures].[Extended Amount] to be written). Type in a dash for the minus sign then drag over Total Product Cost.

In the Format String, use the same "#,##0.00;-#,##0.00" formatting (do not forget to include the double quotes in this case) we used on the measure groups. For Non-empty behavior (in other words, what measure group's non-empty behavior it would mimic), select Extended Amount and Total Product Cost. Click File --> Save All, and the calculated member is set!

Dimension Creation

Now that we have the basic setup of the cube complete, we can move onto hashing out the skeleton dimensions that were created for us. To say the creation and editing of dimensions in BIDS is easier compared to the Pentaho Schema Workbench would be a severe understatement.

For starters, the Dimensions are already created for us in SSAS upon creation of the cube. As mentioned previously, these skeleton dimensions simply have their primary keys as the attributes, but BIDS allows the ability to intuitively add attributes from the source to the dimension.

We'll use the Dim Sales Territory dimension as an example. Let's double click on Dim Sales Territory under Dimensions. There are four subwindows on this view -- Dimension Structure, Attribute Relationships, Translations, and Browser. Dimension Structure is the default view and is the place where we will define what attributes are to be included in the Dim Sales Territory dimension.

In this Dimension Structure view are three self-explanatory areas: Attributes, Hierarchies, and Data Source View. To add an attribute to the dimension from the source, one must simply select what attributes to be added from the Data Source View and drag/drop these fields to the Attributes area. For Dim Sales Territory, we will move the rest of the fields from the Data Source View over to the Attributes area. Repeat this process for all the dimensions that have been created.

Creating Hierarchies

Once attributes have been defined, to define hierarchies, it will be the same drag/drop principle, except this time, you will be dragging individual Attributes to the Hierarchies area, in order from parent to child. For this particular hierarchy, as the Sales Territory Group is the parent of Sales Territory Country and Sales Territory Country is the parent of Sales Territory Region. We define this hierarchy as such.

You can rename the Hierarchy once it has been established by right clicking on it and going to Properties. We'll call this hierarchy Sales Territories.

One last step in defining this hierarchy is to define the Attribute Relationship for the hierarchy. This enables SSAS to effectively and efficiently calculate this hierarchy during processing, and thus, it will make aggregations against this hierarchy be considerably faster.

To establish the desired Attribute Relationship, we click on the Attribute Relationships pane. At first, we see that there is only attribute relationships defined between the Sales Territory Key and the dimensional attributes we dragged over earlier.

Attribute Relationships are read to be many to one from left to right. So, for the example above, the Attribute Relationship should be read as "Many Sales Territory Keys are associated to one Sales Territory Group", etc. We want to further hash this Attribute Relationship out to include the hierarchy we created.

Conceptually speaking, we want to design the relationship such that you can read it as: "Many Sales Territory Regions are associated to one Sales Territory Country, and many Sales Territory Countries are associated to one Sales Territory Group".

To implement this, we first start at the lowest level, Sales Territory Region. Right click on this node then click on New Attribute Relationship. On the Related Attribute dropdown, select Sales Territory Country. Leave the Relationship type as-is (Flexible). Click OK. Repeat the same step with the Sales Territory Country node, except the Related Attribute should now be Sales Territory Group.

Save the dimension, build the solution, and deploy it. Now, if we go back to the Reseller Sales cube and go to Browser, you will see the Sales Territories Hierarchy defined in the Dim Sales Territory dimension.

|

| A very nice hierarchy! |

SSAS has an incredibly robust security system. The database is secured by a Roles-based system, where one can define what users can access what cubes. We can even get to the granular level and define what dimensions or what tuples specific users can see (though, Microsoft generally recommends making your security definitions broad).

Since this topic covers all manner of sins, I will refer you to the TechNet lesson which covers all the basics. Suffice to say, this security model should fulfill the vast majority of restricted access needs of an organization.

Consumption of Data

So, now we have a nice, fully hashed out Dimensional Model with a Reseller Sales cube properly defined. We do one last build and deploy, and our SSAS database is now ready for some slicing and dicing!

The primary user interface for SSAS really is Microsoft Excel (2010 version in this example). It is the platform of choice for most data analysts in enterprises, and quite frankly, it is one of the best ad-hoc data analytic tools, period. This is the one area where SQL Server 2008 BI kind of falls short comparatively to Pentaho. As previously mentioned, Pentaho's main paradigm is everything through the Pentaho User Console first. SQL Server 2008 BI requires a little work to get a common interface up and running, specifically with tools like Sharepoint Server and PowerPivot for Sharepoint.

With regards to Excel 2010, establishing a connection to our SSAS cube is straightforward. First, go to the Data ribbon and select "From Other Sources". In the submenu, select Analysis Services. The server name for this example is localhost\SQLDEV01 and we will use Windows Authentication.

Upon hitting Next, we see our deployed AdventureWorks database and the Reseller Sales cube available. Select the Reseller Sales cube then hit Finish.

Excel asks what kind of report we want, and we'll use a plain ol' PivotTable Report for this example. We'll drop the table on $A$1 and hit OK. What appears next is very familiar to Excel users, the Pivot Table Field List. It is broken down by the measures table first followed by the associated dimensions.

From here, slicing and dicing is as simple as dragging and dropping measures and dimensional attributes to the appropriate axes or to the values section. First, let's see Net Profit or Loss by Department Name (from the Dim Employee dimension) and broken down by Fiscal Years across the columns. We'll select Net Profit or Loss from the measures and then drag Department Name to the Row Labels and Fiscal Year to the Column Labels.

Easy peasy. The Excel 2010 PivotTable is the most powerful and primary way most "power data analysts" will access the cubes. It allows the ad-hoc functionality to answer most data analytic questions in whatever format the data analyst desires. Because it is native to Excel 2010, all functionality of Excel 2010 is also at the disposal of the data analyst, which some may argue is the best AND worst part about opening up Excel 2010 as the primary front-end for SSAS.

Since this overview only covers SSAS, we will not go into detail about SSRS, but one should understand that SSRS is the primary front-end for parameterized static reports against the SSAS cubes. That is, the report format itself will not change, but users would need the functionality to see different cuts of the data on the report format. One could argue the same functionality could be replicated in Excel -- however, SSRS offers niceties such as application level security and scheduled distribution of reports. In most SQL Server BI implementation I'd been involved with, a combination of Excel and SSRS usually does the trick for a majority of users.

Consumption of Data Summary

While PAS comes tightly coupled with Pentaho User Console, SSAS and SQL Server BI does not have a "one stop shop" for users to immediately access BI artifacts. The primary bare-bones method for accessing cube data is via Excel 2010, and SSRS reports could be built on top of the SSAS cubes to allow users the ability to have parameterized static reports.

This setup isn't necessarily a bad thing, as not all BI implementations are created equal. However, a common front-end like Pentaho User Console would be most helpful to make BI implementations on SQL Server a little more agile with regards to rapid prototyping. On the flip side of the coin, most of the audience for a BI tool would be ecstatic with the capabilities granted on their "home turf" platform (Excel), so such a user interface would be more of a nice-to-have.

In terms of consuming the data itself, it is incredibly intuitive within Excel 2010. Instead obtaining knowledge of, say, ANSI SQL querying, data analysts need only understand how the dimensional model is structured and how a PivotTable Report works, and many analytic questions can be answered. In short, if you're walking into a Microsoft shop that needs BI, there are very few reasons why you SHOULDN'T use SQL Server BI.

SQL Server Analysis Services: The Good, The Bad, and The Weird

The Good

- Development process is a cinch comparatively to PAS. BIDS is uses the world-class Visual Studio environment, and the dimensional modeling components for SSAS are highly intuitive.

- Tight integration with Excel 2010. Considering Excel is the dominant data analytic tool in the market, this is a boon for developers. Data can be easily brought in and any number of creative solutions off of Excel can be formulated for business use.

- SSAS scales very nicely. It is very fast out of the gate, but if your implementation calls for massive data marts and cubes, SSAS offers the ability to scale out across servers.

- The security model is vastly superior to PAS

The Bad

- Licensing. Want SSAS by itself? You'll need to buy a full SQL Server license. Smaller enterprises may be swayed away due to the cost of entry, and will essentially pay for components they may not use (such as the database engine, SSIS, SSRS, etc)

- No common user interface for SQL Server BI out of the gate. I think if Microsoft came up with a Pentaho User Console concept for SQL Server BI, it will add tremendous value.

- Why can't we multi-select measures on a cube in BIDS to edit common properties?

SQL Server Analysis Services: The Summary

I honestly think, apart from minor quabbles, that Microsoft has hit their BI offering out of the park. While platforms such as TM-1 or EssBase may have the leg up in terms of flexibility under the hood of the analytic engine, SSAS has succeeded in wrapping the complicated concept of BI development and consumption into an easy-to-use package. While arguments can be made for the merits or shortcomings of requiring a full SQL Server license for the BI stack, I actually think that the tight coupling is needed.

A BI solution needs to be coherent not only on the end-product but also for development. In my opinion, tightly coupling the components with the SQL Server database engine ensures the best functionality of SSAS (and the other BI components) out of the gate. Gone are the days when developers need to troubleshoot why the analytic engine doesn't play nicely with the database engine, or why the reporting front-end has some quirks obtaining data from the analytic engine. SQL Server BI's strongest trait is its coherence.

If you have a Microsoft shop with an investment already made in SQL Server, choosing SSAS as the primary analytic engine (as well as the rest of the SQL Server BI stack for other functionality) should be a no-brainer. If your organization has not made an investment in SQL Server, but is otherwise a heavy Microsoft shop, SSAS is still definitely worth a look. While the cost is a factor, comparable offerings from vendors such as Oracle or SAP would probably run you the same amount and would not have the luxury of tight integration with other Microsoft offerings. Make no mistake, SSAS is a world-class analytic platform that I would not hesitate recommending.

2 comments:

Thank you very much

BinhTruong

Hi, Great.. Tutorial is just awesome..It is really helpful for a newbie like me..

I am a regular follower of your blog. Really very informative post you shared here.

Kindly keep blogging. If anyone wants to become a Front end developer learn from Javascript Training in Chennai .

or Javascript Training in Chennai.

Nowadays JavaScript has tons of job opportunities on various vertical industry. ES6 Training in Chennai

Post a Comment